LLaMA-7B

This repo contains a low-rank adapter (LoRA) for LLaMA-7B trained on a Bulgarian dataset.

This model was introduced in this paper.

Model description

The training data is a private Bulgarian dataset.

Intended uses & limitations

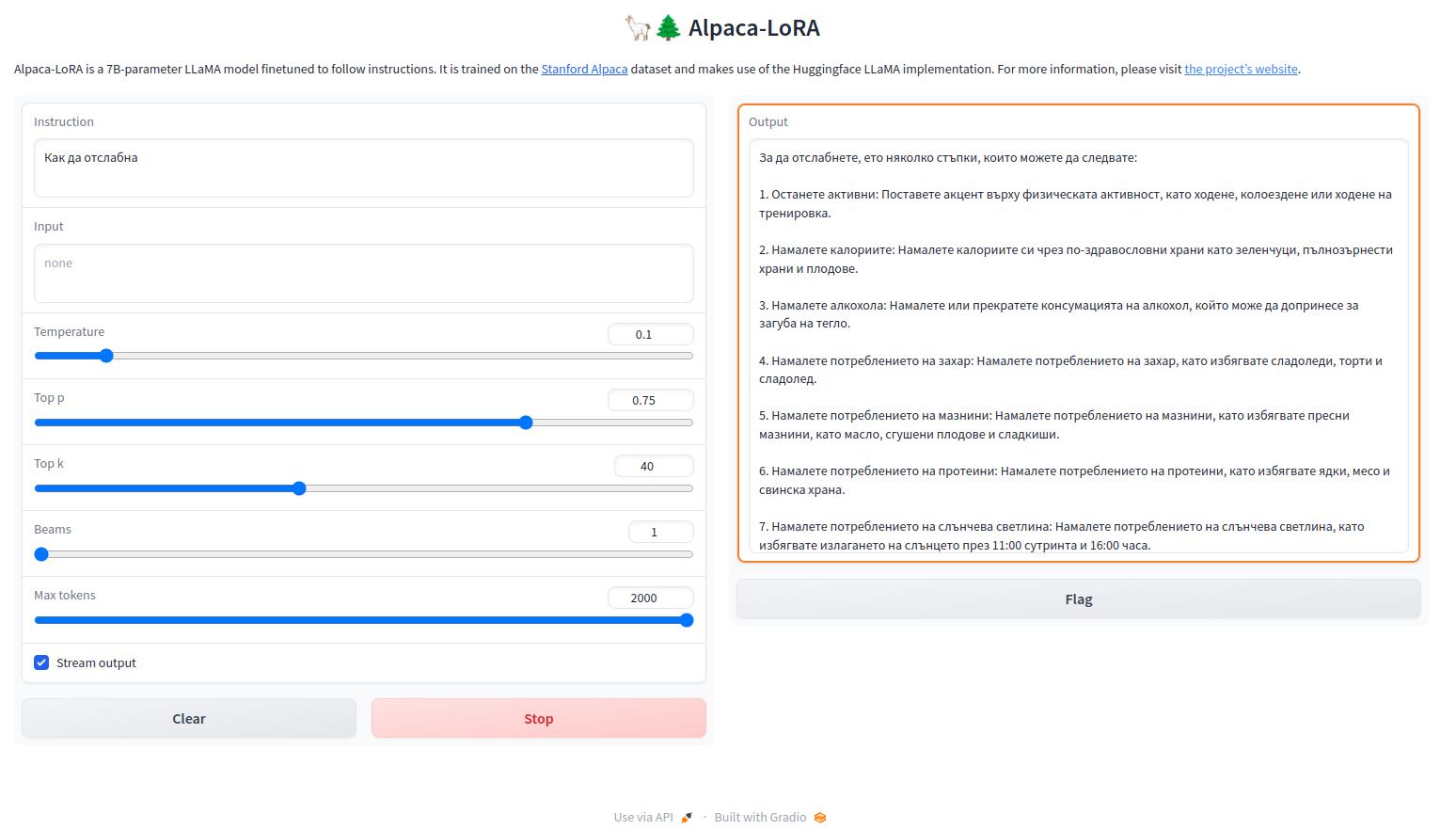

This is an instruction-following model similar to ChatGPT, but in Bulgarian.

How to use

Here is how to use this model in PyTorch:

    >>> git clone https://github.com/tloen/alpaca-lora.git
    >>> cd alpaca-lora
    >>> pip install -r requirements.txt
    >>> python generate.py \
            --load_8bit \
            --base_model 'yahma/llama-7b-hf' \
            --lora_weights 'rmihaylov/alpaca-lora-bg-7b' \
            --share_gradio

This downloads both the base model and the adapter from Hugging Face, then launches a Gradio interface for chatting.
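If you prefer to call the model from your own script instead of the Gradio interface, the following is a minimal sketch of roughly what the command above does, assuming the `transformers`, `peft`, `bitsandbytes`, and `sentencepiece` packages are installed and a CUDA GPU is available. The helper names (`build_prompt`, `load_model`, `generate`) are hypothetical, and the prompt template is the default English Alpaca template from alpaca-lora; whether this adapter was trained with exactly that template is an assumption.

```python
# Sketch only: helper names are illustrative, not part of alpaca-lora.
BASE_MODEL = "yahma/llama-7b-hf"
LORA_WEIGHTS = "rmihaylov/alpaca-lora-bg-7b"


def build_prompt(instruction, input_text=""):
    """Format a request with the default Alpaca template (assumed here)."""
    if input_text:
        return (
            "Below is an instruction that describes a task, paired with an "
            "input that provides further context. Write a response that "
            "appropriately completes the request.\n\n"
            f"### Instruction:\n{instruction}\n\n"
            f"### Input:\n{input_text}\n\n"
            "### Response:\n"
        )
    return (
        "Below is an instruction that describes a task. Write a response "
        "that appropriately completes the request.\n\n"
        f"### Instruction:\n{instruction}\n\n"
        "### Response:\n"
    )


def load_model(base_model=BASE_MODEL, lora_weights=LORA_WEIGHTS):
    """Load the 8-bit base model and attach the LoRA adapter."""
    # Heavy imports are kept local so the prompt helper works without them.
    import torch
    from peft import PeftModel
    from transformers import BitsAndBytesConfig, LlamaForCausalLM, LlamaTokenizer

    tokenizer = LlamaTokenizer.from_pretrained(base_model)
    model = LlamaForCausalLM.from_pretrained(
        base_model,
        quantization_config=BitsAndBytesConfig(load_in_8bit=True),
        torch_dtype=torch.float16,
        device_map="auto",
    )
    model = PeftModel.from_pretrained(model, lora_weights)
    model.eval()
    return model, tokenizer


def generate(model, tokenizer, instruction, max_new_tokens=128):
    """Run one instruction through the model and return only the response."""
    import torch

    device = next(model.parameters()).device
    encoded = tokenizer(build_prompt(instruction), return_tensors="pt")
    with torch.no_grad():
        output_ids = model.generate(
            input_ids=encoded["input_ids"].to(device),
            attention_mask=encoded["attention_mask"].to(device),
            max_new_tokens=max_new_tokens,
        )
    text = tokenizer.decode(output_ids[0], skip_special_tokens=True)
    # The decoded text echoes the prompt; keep what follows the response marker.
    return text.split("### Response:")[-1].strip()


# Example (downloads several GB of weights on first run):
# model, tokenizer = load_model()
# print(generate(model, tokenizer, "Коя е столицата на България?"))
```

The imports inside `load_model` and `generate` are deliberately local, so `build_prompt` can be reused or inspected without the GPU dependencies installed.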

Example using this model: Colab. You need Colab Pro because loading the model requires a high-RAM runtime.
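To give a feel for the memory requirement, here is a rough, weights-only estimate. It assumes about 6.7 billion parameters for LLaMA-7B (an approximate figure) and ignores activations, the adapter, and any temporary copies made while the checkpoint is loaded, so actual peak usage may be higher.

```python
GIB = 1024 ** 3  # bytes per gibibyte

# Assumption: approximate parameter count for LLaMA-7B.
LLAMA_7B_PARAMS = 6.7e9


def weight_memory_gib(num_params, bytes_per_param):
    """Memory needed to hold the weights alone, in GiB."""
    return num_params * bytes_per_param / GIB


fp16_gib = weight_memory_gib(LLAMA_7B_PARAMS, 2)  # 16-bit checkpoint
int8_gib = weight_memory_gib(LLAMA_7B_PARAMS, 1)  # after 8-bit quantization

print(f"fp16 weights: ~{fp16_gib:.1f} GiB")
print(f"int8 weights: ~{int8_gib:.1f} GiB")
```

Under these assumptions the 16-bit checkpoint alone is roughly 12.5 GiB, which suggests why a standard runtime may run out of memory during loading even though the quantized model is about half that size.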