FUTGA: Fine-grained Music Understanding through Temporally-enhanced Generative Augmentation

News

- [07/28] We released FUTGA-7B and its training/inference code, built on the SALMONN-7B backbone!

Overview

FUTGA is an audio LLM with fine-grained music understanding, trained through generative augmentation with temporal compositions. Using existing music caption datasets and large language models (LLMs), we synthesize detailed captions for full-length songs that include structural descriptions and time boundaries. This synthetic dataset enables FUTGA to identify temporal changes at key transition points and their musical functions, and to generate dense captions for full-length songs.
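To make "dense captions with time boundaries" concrete, here is a minimal Python sketch of one possible representation: a full-length song split into time-bounded segments, each with a musical function and a caption, where the transition points are the start times of every segment after the first. The `Segment` class, the `[mm:ss-mm:ss] function: caption` rendering, and the toy song are hypothetical illustrations, not FUTGA's actual output format.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    """Hypothetical time-bounded segment of a full-length song."""
    start: float    # segment start, in seconds
    end: float      # segment end, in seconds
    function: str   # musical function, e.g. "intro", "verse", "chorus"
    caption: str    # free-text description of this segment

    def __post_init__(self):
        if self.end <= self.start:
            raise ValueError("segment end must come after its start")


def fmt_time(seconds: float) -> str:
    """Format seconds as mm:ss."""
    total = int(round(seconds))
    return f"{total // 60:02d}:{total % 60:02d}"


def transition_points(segments):
    """Boundaries where one segment hands over to the next (start of each later segment)."""
    ordered = sorted(segments, key=lambda s: s.start)
    return [s.start for s in ordered[1:]]


def render_dense_caption(segments):
    """Render segments in time order as one timestamped line each."""
    ordered = sorted(segments, key=lambda s: s.start)
    return "\n".join(
        f"[{fmt_time(s.start)}-{fmt_time(s.end)}] {s.function}: {s.caption}"
        for s in ordered
    )


# Toy example (made-up song, for illustration only).
song = [
    Segment(0.0, 15.0, "intro", "Soft piano chords set a calm mood."),
    Segment(15.0, 45.0, "verse", "Vocals enter over a steady drum groove."),
    Segment(45.0, 75.0, "chorus", "The full band swells with layered harmonies."),
]
print(render_dense_caption(song))
print(transition_points(song))
```

Keeping the boundaries as explicit numbers, rather than only inside the text, makes it easy to compare predicted transition points against reference annotations.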

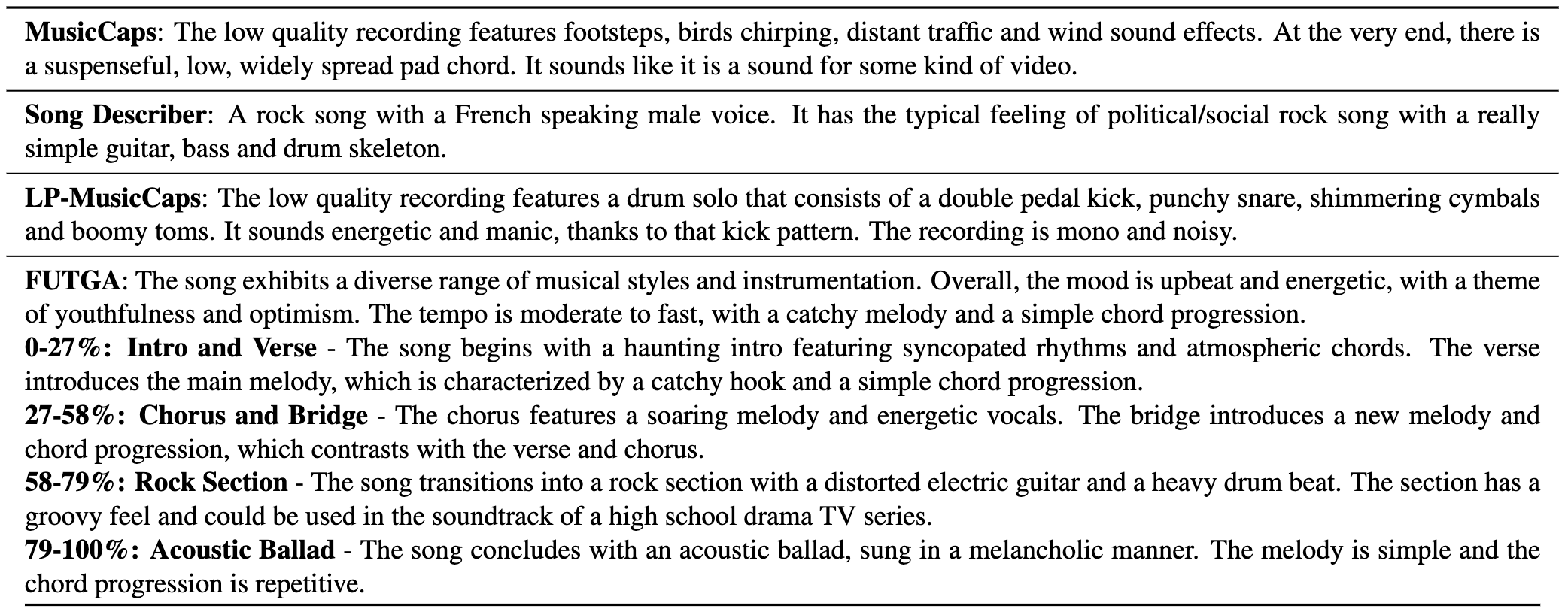

Comparison of FUTGA dense captions with captions from MusicCaps, SongDescriber, and LP-MusicCaps

How to load the model

We build FUTGA-7B on SALMONN. Following the SALMONN instructions, download:

- whisper large v2 to whisper_path,
- Fine-tuned BEATs_iter3+ (AS2M) (cpt2) to beats_path,
- vicuna 7B v1.5 to vicuna_path,
- FUTGA-7b to ckpt_path.
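Before launching inference, it can help to confirm that all four checkpoints are where you expect them. The sketch below is a hypothetical pre-flight check, not part of FUTGA or SALMONN: the key names mirror the list above, but SALMONN's own config file may name these fields differently, and the example paths are placeholders.

```python
from pathlib import Path

# Key names follow this README; the real SALMONN config may use other field names.
REQUIRED_KEYS = ("whisper_path", "beats_path", "vicuna_path", "ckpt_path")


def check_paths(config: dict) -> list:
    """Return a list of problems; an empty list means every required path exists."""
    problems = []
    for key in REQUIRED_KEYS:
        value = config.get(key)
        if not value:
            problems.append(f"{key} is not set")
        elif not Path(value).exists():
            problems.append(f"{key} does not exist: {value}")
    return problems


if __name__ == "__main__":
    # Placeholder locations: replace with wherever you downloaded each checkpoint.
    config = {
        "whisper_path": "checkpoints/whisper-large-v2",
        "beats_path": "checkpoints/beats_iter3_plus_as2m_cpt2.pt",
        "vicuna_path": "checkpoints/vicuna-7b-v1.5",
        "ckpt_path": "checkpoints/futga-7b.pth",
    }
    for problem in check_paths(config):
        print(problem)
```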

Datasets

We generate dense captions for full-length songs in MusicCaps and SongDescriber. The raw captions are generated directly by FUTGA-7B, while seg_captions_features contains automatically segmented captions with structural descriptions, along with textual and audio features for each segment.