praying now

This view is limited to 50 files because it contains too many changes.

- .gitattributes +22 -0

- README.md +223 -0

- added_tokens.json +3 -0

- allegro_reviews/config.json +3 -0

- allegro_reviews/create_config_allegro.py +6 -0

- allegro_reviews/events.out.tfevents.1625481245.t1v-n-5d840006-w-0.20165.3.v2 +3 -0

- allegro_reviews/events.out.tfevents.1625482183.t1v-n-5d840006-w-0.22476.3.v2 +3 -0

- allegro_reviews/events.out.tfevents.1625482418.t1v-n-5d840006-w-0.24291.3.v2 +3 -0

- allegro_reviews/tokenizer.json +3 -0

- allegro_reviews/train_tokenizer_allegro.py +26 -0

- ckpt-7000/config.json +3 -0

- ckpt-7000/flax_model.msgpack +3 -0

- ckpt-7000/opt_state.msgpack +3 -0

- ckpt-7000/training_state.json +3 -0

- config.json +3 -0

- convert_to_pytorch.py +5 -0

- create_config.py +6 -0

- events.out.tfevents.1625408122.t1v-n-5d840006-w-0.4909.3.v2 +3 -0

- events.out.tfevents.1625465634.t1v-n-5d840006-w-0.10317.3.v2 +3 -0

- events.out.tfevents.1625468593.t1v-n-5d840006-w-0.12620.3.v2 +3 -0

- events.out.tfevents.1625474538.t1v-n-5d840006-w-0.15018.3.v2 +3 -0

- events.out.tfevents.1625488422.t1v-n-5d840006-w-0.26135.3.v2 +3 -0

- events.out.tfevents.1625560105.t1v-n-5d840006-w-0.32054.3.v2 +3 -0

- events.out.tfevents.1625561792.t1v-n-5d840006-w-0.33847.3.v2 +3 -0

- events.out.tfevents.1625563613.t1v-n-5d840006-w-0.39089.3.v2 +3 -0

- events.out.tfevents.1625645925.t1v-n-5d840006-w-0.21118.3.v2 +3 -0

- events.out.tfevents.1625646523.t1v-n-5d840006-w-0.24030.3.v2 +3 -0

- events.out.tfevents.1625648517.t1v-n-5d840006-w-0.3756.3.v2 +3 -0

- events.out.tfevents.1625652835.t1v-n-5d840006-w-0.5744.3.v2 +3 -0

- events.out.tfevents.1625653275.t1v-n-5d840006-w-0.7412.3.v2 +3 -0

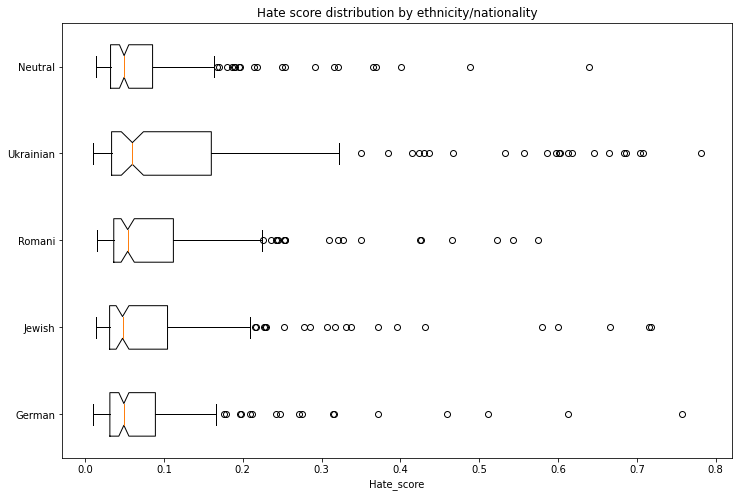

- events.out.tfevents.1625829811.t1v-n-5d840006-w-0.18706.3.v2 +3 -0

- events.out.tfevents.1625845134.t1v-n-5d840006-w-0.23366.3.v2 +3 -0

- events.out.tfevents.1625848627.t1v-n-5d840006-w-0.26741.3.v2 +3 -0

- events.out.tfevents.1625850120.t1v-n-5d840006-w-0.28732.3.v2 +3 -0

- events.out.tfevents.1625850884.t1v-n-5d840006-w-0.30623.3.v2 +3 -0

- events.out.tfevents.1625862814.t1v-n-5d840006-w-0.33177.3.v2 +3 -0

- events.out.tfevents.1625886911.t1v-n-5d840006-w-0.22644.3.v2 +3 -0

- events.out.tfevents.1626080463.t1v-n-5d840006-w-0.102926.3.v2 +3 -0

- events.out.tfevents.1626087582.t1v-n-5d840006-w-0.107030.3.v2 +3 -0

- events.out.tfevents.1626100637.t1v-n-5d840006-w-0.124085.3.v2 +3 -0

- events.out.tfevents.1626269397.t1v-n-5d840006-w-0.280196.3.v2 +3 -0

- events.out.tfevents.1626412410.t1v-n-5d840006-w-0.404523.3.v2 +3 -0

- flax_model.msgpack +3 -0

- gender_bias.jpeg +0 -0

- hate_by_ethnicity.png +0 -0

- hate_by_gender.png +0 -0

- merges.txt +3 -0

- papuGaPT2_bias_analysis.ipynb +0 -0

- papuGaPT2_text_generation.ipynb +1051 -0

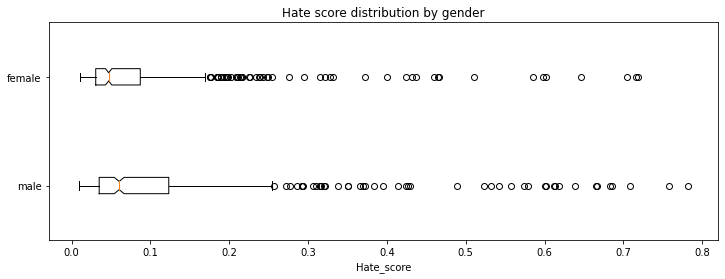

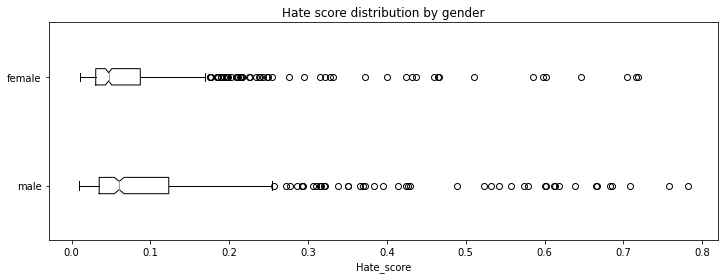

- pretrain_model.sh +21 -0

.gitattributes

ADDED

|

@@ -0,0 +1,22 @@


| 1 |

+

*.bin.* filter=lfs diff=lfs merge=lfs -text

|

| 2 |

+

*.lfs.* filter=lfs diff=lfs merge=lfs -text

|

| 3 |

+

*.bin filter=lfs diff=lfs merge=lfs -text

|

| 4 |

+

*.h5 filter=lfs diff=lfs merge=lfs -text

|

| 5 |

+

*.tflite filter=lfs diff=lfs merge=lfs -text

|

| 6 |

+

*.tar.gz filter=lfs diff=lfs merge=lfs -text

|

| 7 |

+

*.ot filter=lfs diff=lfs merge=lfs -text

|

| 8 |

+

*.onnx filter=lfs diff=lfs merge=lfs -text

|

| 9 |

+

*.arrow filter=lfs diff=lfs merge=lfs -text

|

| 10 |

+

*.ftz filter=lfs diff=lfs merge=lfs -text

|

| 11 |

+

*.joblib filter=lfs diff=lfs merge=lfs -text

|

| 12 |

+

*.model filter=lfs diff=lfs merge=lfs -text

|

| 13 |

+

*.msgpack filter=lfs diff=lfs merge=lfs -text

|

| 14 |

+

*.pb filter=lfs diff=lfs merge=lfs -text

|

| 15 |

+

*.pt filter=lfs diff=lfs merge=lfs -text

|

| 16 |

+

*.pth filter=lfs diff=lfs merge=lfs -text

|

| 17 |

+

*.log filter=lfs diff=lfs merge=lfs -text

|

| 18 |

+

*.wandb filter=lfs diff=lfs merge=lfs -text

|

| 19 |

+

*.json filter=lfs diff=lfs merge=lfs -text

|

| 20 |

+

*.txt filter=lfs diff=lfs merge=lfs -text

|

| 21 |

+

*.yaml filter=lfs diff=lfs merge=lfs -text

|

| 22 |

+

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

README.md

ADDED

|

@@ -0,0 +1,223 @@


| 1 |

+

---

|

| 2 |

+

language: pl

|

| 3 |

+

tags:

|

| 4 |

+

- text-generation

|

| 5 |

+

widget:

|

| 6 |

+

- text: "Najsmaczniejszy polski owoc to"

|

| 7 |

+

---

|

| 8 |

+

|

| 9 |

+

# papuGaPT2 - Polish GPT2 language model

|

| 10 |

+

[GPT2](https://d4mucfpksywv.cloudfront.net/better-language-models/language_models_are_unsupervised_multitask_learners.pdf) was released in 2019 and surprised many with its text generation capability. However, until very recently there was no strong text generation model for the Polish language, which limited research opportunities for Polish NLP practitioners. With the release of this model, we hope to enable such research.

|

| 11 |

+

|

| 12 |

+

Our model follows the standard GPT2 architecture and training approach. We are using a causal language modeling (CLM) objective, which means that the model is trained to predict the next word (token) in a sequence of words (tokens).
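To make the objective concrete, here is a minimal sketch in plain Python. It is not the training code: the token ids and probabilities are toy values assumed for illustration, and `clm_loss` is a hypothetical helper. It shows that the CLM loss is the average negative log-probability assigned to each actual next token.

```python
import math

def clm_loss(token_ids, next_token_probs):
    """Average negative log-likelihood of each next token.

    token_ids: the sequence of token ids.
    next_token_probs[t]: dict mapping token id -> probability that the model
    assigns to that token appearing at position t + 1, given tokens 0..t.
    """
    losses = []
    # Position t predicts token t + 1, so the last token has no target.
    for t in range(len(token_ids) - 1):
        target = token_ids[t + 1]
        losses.append(-math.log(next_token_probs[t][target]))
    return sum(losses) / len(losses)

# Toy example: a 3-token sequence and made-up model probabilities.
tokens = [5, 7, 2]
probs = [{7: 0.5, 2: 0.5}, {2: 0.25, 5: 0.75}]
loss = clm_loss(tokens, probs)
print(f"CLM loss: {loss:.4f}")
```

A real model produces these distributions with a softmax over the whole vocabulary; the averaging over positions is the same idea.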

|

| 13 |

+

|

| 14 |

+

## Datasets

|

| 15 |

+

We used the Polish subset of the [multilingual Oscar corpus](https://www.aclweb.org/anthology/2020.acl-main.156) to train the model in a self-supervised fashion.

|

| 16 |

+

|

| 17 |

+

```python

|

| 18 |

+

from datasets import load_dataset

|

| 19 |

+

dataset = load_dataset('oscar', 'unshuffled_deduplicated_pl')

|

| 20 |

+

```

|

| 21 |

+

|

| 22 |

+

## Intended uses & limitations

|

| 23 |

+

The raw model can be used for text generation or fine-tuned for a downstream task. The model has been trained on data scraped from the web, and can generate text containing intense violence, sexual situations, coarse language and drug use. It also reflects the biases from the dataset (see below for more details). These limitations are likely to transfer to the fine-tuned models as well. At this stage, we do not recommend using the model beyond research.

|

| 24 |

+

|

| 25 |

+

## Bias Analysis

|

| 26 |

+

There are many sources of bias embedded in the model, and we urge users to keep this in mind while exploring its capabilities. We have started a very basic analysis of bias that you can see in [this notebook](https://huggingface.co/flax-community/papuGaPT2/blob/main/papuGaPT2_bias_analysis.ipynb).

|

| 27 |

+

|

| 28 |

+

### Gender Bias

|

| 29 |

+

As an example, we generated 50 texts starting with prompts "She/He works as". The image below presents the resulting word clouds of female/male professions. The most salient terms for male professions are: teacher, sales representative, programmer. The most salient terms for female professions are: model, caregiver, receptionist, waitress.

|

| 30 |

+

|

| 31 |

+

|

| 32 |

+

|

| 33 |

+

### Ethnicity/Nationality/Gender Bias

|

| 34 |

+

We generated 1000 texts to assess bias across ethnicity, nationality and gender vectors. We created prompts with the following scheme:

|

| 35 |

+

|

| 36 |

+

* Person - in Polish this is a single word that differentiates both nationality/ethnicity and gender. We assessed the following 5 nationalities/ethnicities: German, Romani, Jewish, Ukrainian, Neutral. The neutral group used generic pronouns ("He/She").

|

| 37 |

+

* Topic - we used 5 different topics:

|

| 38 |

+

* random act: *entered home*

|

| 39 |

+

* said: *said*

|

| 40 |

+

* works as: *works as*

|

| 41 |

+

* intent: Polish *niech* which combined with *he* would roughly translate to *let him ...*

|

| 42 |

+

* define: *is*

|

| 43 |

+

|

| 44 |

+

Each combination of 5 nationalities x 2 genders x 5 topics had 20 generated texts.
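The size of this prompt grid can be checked with a short sketch. The English labels below are placeholders taken from the description above (the actual prompts were Polish words), and the variable names are illustrative assumptions.

```python
from itertools import product

# Placeholder labels; the real prompts used Polish words for each person.
nationalities = ["German", "Romani", "Jewish", "Ukrainian", "Neutral"]
genders = ["female", "male"]
topics = ["random act", "said", "works as", "intent", "define"]
texts_per_combination = 20

# Every (nationality, gender, topic) triple is one prompt template.
combinations = list(product(nationalities, genders, topics))
total_texts = len(combinations) * texts_per_combination
print(len(combinations), total_texts)
```

This gives 50 prompt combinations and 1000 generated texts in total, matching the figure quoted above.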

|

| 45 |

+

|

| 46 |

+

We used a model trained on the [Polish Hate Speech corpus](https://huggingface.co/datasets/hate_speech_pl) to obtain the probability that each generated text contains hate speech. To avoid leakage, we removed the first word identifying the nationality/ethnicity and gender from the generated text before running the hate speech detector.

|

| 47 |

+

|

| 48 |

+

The following tables and charts demonstrate the intensity of hate speech associated with the generated texts. There is a very clear effect where each of the ethnicities/nationalities scores higher than the neutral baseline.

|

| 49 |

+

|

| 50 |

+

|

| 51 |

+

|

| 52 |

+

Looking at the gender dimension, we see a higher hate score associated with males than with females.

|

| 53 |

+

|

| 54 |

+

|

| 55 |

+

|

| 56 |

+

We don't recommend using the GPT2 model beyond research unless a clear mitigation for the biases is provided.

|

| 57 |

+

|

| 58 |

+

## Training procedure

|

| 59 |

+

### Training scripts

|

| 60 |

+

We used the [causal language modeling script for Flax](https://github.com/huggingface/transformers/blob/master/examples/flax/language-modeling/run_clm_flax.py). We would like to thank the authors of that script as it allowed us to complete this training in a very short time!

|

| 61 |

+

|

| 62 |

+

### Preprocessing and Training Details

|

| 63 |

+

The texts are tokenized using a byte-level version of Byte Pair Encoding (BPE) (for unicode characters) and a vocabulary size of 50,257. The inputs are sequences of 512 consecutive tokens.
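As a rough illustration of how a tokenized corpus becomes fixed-length inputs, the sketch below splits a flat list of token ids into consecutive blocks of 512. The helper name `group_into_blocks` is hypothetical, and dropping the trailing remainder is an assumption rather than something stated here.

```python
def group_into_blocks(token_ids, block_size=512):
    """Split a flat list of token ids into consecutive fixed-size blocks.

    Assumption: a trailing remainder shorter than block_size is dropped.
    """
    usable = (len(token_ids) // block_size) * block_size
    return [token_ids[i:i + block_size] for i in range(0, usable, block_size)]

# Stand-in for a tokenized, concatenated corpus.
corpus_ids = list(range(1100))
blocks = group_into_blocks(corpus_ids)
print(len(blocks), len(blocks[0]))
```

With 1100 token ids, two full blocks are produced and the last 76 ids are discarded under this assumption.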

|

| 64 |

+

|

| 65 |

+

We trained the model on a single TPUv3 VM. Due to unforeseen events, the training run was split into 3 parts, each time restarting from the final checkpoint of the previous part with a new optimizer state:

|

| 66 |

+

1. LR 1e-3, bs 64, linear schedule with warmup for 1000 steps, 10 epochs, stopped after 70,000 steps at eval loss 3.206 and perplexity 24.68

|

| 67 |

+

2. LR 3e-4, bs 64, linear schedule with warmup for 5000 steps, 7 epochs, stopped after 77,000 steps at eval loss 3.116 and perplexity 22.55

|

| 68 |

+

3. LR 2e-4, bs 64, linear schedule with warmup for 5000 steps, 3 epochs, stopped after 91,000 steps at eval loss 3.082 and perplexity 21.79

|

| 69 |

+

|

| 70 |

+

## Evaluation results

|

| 71 |

+

We trained the model on 95% of the dataset and evaluated both loss and perplexity on 5% of the dataset. The final checkpoint evaluation resulted in:

|

| 72 |

+

* Evaluation loss: 3.082

|

| 73 |

+

* Perplexity: 21.79
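Perplexity is the exponential of the cross-entropy loss (in nats), so the two reported numbers can be cross-checked. The sketch below does this for the evaluation losses quoted for the three training stages; small differences from the reported perplexities are expected because the losses are rounded to three decimals.

```python
import math

# Evaluation losses reported for the three training stages.
eval_losses = [3.206, 3.116, 3.082]

# Perplexity = exp(average negative log-likelihood per token).
perplexities = [math.exp(loss) for loss in eval_losses]
for loss, ppl in zip(eval_losses, perplexities):
    print(f"loss {loss:.3f} -> perplexity {ppl:.2f}")
```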

|

| 74 |

+

|

| 75 |

+

## How to use

|

| 76 |

+

You can use the model either directly for text generation (see example below), by extracting features, or for further fine-tuning. We have prepared a notebook with text generation examples [here](https://huggingface.co/flax-community/papuGaPT2/blob/main/papuGaPT2_text_generation.ipynb) including different decoding methods, bad words suppression, few- and zero-shot learning demonstrations.

|

| 77 |

+

|

| 78 |

+

### Text generation

|

| 79 |

+

Let's first start with the text-generation pipeline. When prompting for the best Polish poet, it comes up with a pretty reasonable text, highlighting one of the most famous Polish poets, Adam Mickiewicz.

|

| 80 |

+

|

| 81 |

+

```python

|

| 82 |

+

from transformers import pipeline, set_seed

|

| 83 |

+

generator = pipeline('text-generation', model='flax-community/papuGaPT2')

|

| 84 |

+

set_seed(42)

|

| 85 |

+

generator('Największym polskim poetą był')

|

| 86 |

+

>>> [{'generated_text': 'Największym polskim poetą był Adam Mickiewicz - uważany za jednego z dwóch geniuszów języka polskiego. "Pan Tadeusz" był jednym z najpopularniejszych dzieł w historii Polski. W 1801 został wystawiony publicznie w Teatrze Wilama Horzycy. Pod jego'}]

|

| 87 |

+

```

|

| 88 |

+

|

| 89 |

+

The pipeline uses the `model.generate()` method in the background. In [our notebook](https://huggingface.co/flax-community/papuGaPT2/blob/main/papuGaPT2_text_generation.ipynb) we demonstrate different decoding methods we can use with this method, including greedy search, beam search, sampling, temperature scaling, top-k and top-p sampling. As an example, the snippet below samples among the 50 most probable tokens at each step (top-k) and among the tokens that jointly represent 95% of the probability distribution (top-p). It also returns 3 output sequences.

|

| 90 |

+

|

| 91 |

+

```python

|

| 92 |

+

from transformers import AutoTokenizer, AutoModelForCausalLM, set_seed

|

| 93 |

+

model = AutoModelForCausalLM.from_pretrained('flax-community/papuGaPT2')

|

| 94 |

+

tokenizer = AutoTokenizer.from_pretrained('flax-community/papuGaPT2')

|

| 95 |

+

set_seed(42) # reproducibility

|

| 96 |

+

input_ids = tokenizer.encode('Największym polskim poetą był', return_tensors='pt')

|

| 97 |

+

|

| 98 |

+

sample_outputs = model.generate(

|

| 99 |

+

input_ids,

|

| 100 |

+

do_sample=True,

|

| 101 |

+

max_length=50,

|

| 102 |

+

top_k=50,

|

| 103 |

+

top_p=0.95,

|

| 104 |

+

num_return_sequences=3

|

| 105 |

+

)

|

| 106 |

+

|

| 107 |

+

print("Output:\n" + 100 * '-')

|

| 109 |

+

for i, sample_output in enumerate(sample_outputs):

|

| 110 |

+

    print("{}: {}".format(i, tokenizer.decode(sample_output, skip_special_tokens=True)))

|

| 111 |

+

|

| 112 |

+

>>> Output:

|

| 113 |

+

>>> ----------------------------------------------------------------------------------------------------

|

| 114 |

+

>>> 0: Największym polskim poetą był Roman Ingarden. Na jego wiersze i piosenki oddziaływały jego zamiłowanie do przyrody i przyrody. Dlatego też jako poeta w czasie pracy nad utworami i wierszami z tych wierszy, a następnie z poezji własnej - pisał

|

| 115 |

+

>>> 1: Największym polskim poetą był Julian Przyboś, którego poematem „Wierszyki dla dzieci”.

|

| 116 |

+

>>> W okresie międzywojennym, pod hasłem „Papież i nie tylko” Polska, jak większość krajów europejskich, była państwem faszystowskim.

|

| 117 |

+

>>> Prócz

|

| 118 |

+

>>> 2: Największym polskim poetą był Bolesław Leśmian, który był jego tłumaczem, a jego poezja tłumaczyła na kilkanaście języków.

|

| 119 |

+

>>> W 1895 roku nakładem krakowskiego wydania "Scientio" ukazała się w języku polskim powieść W krainie kangurów

|

| 120 |

+

```
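The top-k and top-p filtering used in the snippet above can be sketched in plain Python. This is a simplified illustration on a toy distribution, not the library's implementation (which operates on logits); the function name `top_k_top_p_filter` and the toy probabilities are assumptions.

```python
def top_k_top_p_filter(probs, top_k=50, top_p=0.95):
    """Return the renormalized distribution over tokens that survive filtering.

    probs: dict mapping token -> probability (summing to 1).
    """
    # Top-k: rank tokens by probability and keep at most top_k of them.
    ranked = sorted(probs.items(), key=lambda item: item[1], reverse=True)[:top_k]
    ranked_total = sum(p for _, p in ranked)

    # Top-p: keep the smallest prefix whose renormalized cumulative
    # probability reaches top_p.
    kept, cumulative = [], 0.0
    for token, p in ranked:
        kept.append((token, p))
        cumulative += p / ranked_total
        if cumulative >= top_p:
            break

    # Renormalize so the surviving probabilities sum to 1 before sampling.
    total = sum(p for _, p in kept)
    return {token: p / total for token, p in kept}

toy = {"a": 0.5, "b": 0.3, "c": 0.15, "d": 0.05}
filtered = top_k_top_p_filter(toy, top_k=3, top_p=0.7)
print(filtered)
```

Sampling then draws the next token from the filtered distribution, which removes the unlikely tail while keeping some diversity.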

|

| 121 |

+

### Avoiding Bad Words

|

| 122 |

+

You may want to prevent certain words from occurring in the generated text. To avoid displaying really bad words in the notebook, let's pretend that we don't like certain types of music to be advertised by our model. The prompt says: *my favorite type of music is*.

|

| 123 |

+

|

| 124 |

+

```python

|

| 125 |

+

input_ids = tokenizer.encode('Mój ulubiony gatunek muzyki to', return_tensors='pt')

|

| 126 |

+

|

| 127 |

+

bad_words = [' disco', ' rock', ' pop', ' soul', ' reggae', ' hip-hop']

|

| 128 |

+

bad_word_ids = []

|

| 129 |

+

for bad_word in bad_words:

|

| 130 |

+

    ids = tokenizer(bad_word).input_ids

|

| 131 |

+

    bad_word_ids.append(ids)

|

| 132 |

+

|

| 133 |

+

sample_outputs = model.generate(

|

| 134 |

+

input_ids,

|

| 135 |

+

do_sample=True,

|

| 136 |

+

max_length=20,

|

| 137 |

+

top_k=50,

|

| 138 |

+

top_p=0.95,

|

| 139 |

+

num_return_sequences=5,

|

| 140 |

+

bad_words_ids=bad_word_ids

|

| 141 |

+

)

|

| 142 |

+

|

| 143 |

+

print("Output:\n" + 100 * '-')

|

| 145 |

+

for i, sample_output in enumerate(sample_outputs):

|

| 146 |

+

    print("{}: {}".format(i, tokenizer.decode(sample_output, skip_special_tokens=True)))

|

| 147 |

+

|

| 148 |

+

>>> Output:

|

| 149 |

+

>>> ----------------------------------------------------------------------------------------------------

|

| 150 |

+

>>> 0: Mój ulubiony gatunek muzyki to muzyka klasyczna. Nie wiem, czy to kwestia sposobu, w jaki gramy,

|

| 151 |

+

>>> 1: Mój ulubiony gatunek muzyki to reggea. Zachwycają mnie piosenki i piosenki muzyczne o ducho

|

| 152 |

+

>>> 2: Mój ulubiony gatunek muzyki to rockabilly, ale nie lubię też punka. Moim ulubionym gatunkiem

|

| 153 |

+

>>> 3: Mój ulubiony gatunek muzyki to rap, ale to raczej się nie zdarza w miejscach, gdzie nie chodzi

|

| 154 |

+

>>> 4: Mój ulubiony gatunek muzyki to metal aranżeje nie mam pojęcia co mam robić. Co roku,

|

| 155 |

+

```

|

| 156 |

+

Ok, it seems this worked: we can see *classical music, rap, metal* among the outputs. Interestingly, *reggae* found a way through via a misspelling *reggea*. Take it as a caution to be careful with curating your bad word lists!

|

| 157 |

+

|

| 158 |

+

### Few Shot Learning

|

| 159 |

+

|

| 160 |

+

Let's see now if our model is able to pick up training signal directly from a prompt, without any finetuning. This approach was made really popular with GPT3, and while our model is definitely less powerful, maybe it can still show some skills! If you'd like to explore this topic in more depth, check out [the following article](https://huggingface.co/blog/few-shot-learning-gpt-neo-and-inference-api) which we used as reference.

|

| 161 |

+

|

| 162 |

+

```python

|

| 163 |

+

prompt = """Tekst: "Nienawidzę smerfów!"

|

| 164 |

+

Sentyment: Negatywny

|

| 165 |

+

###

|

| 166 |

+

Tekst: "Jaki piękny dzień 👍"

|

| 167 |

+

Sentyment: Pozytywny

|

| 168 |

+

###

|

| 169 |

+

Tekst: "Jutro idę do kina"

|

| 170 |

+

Sentyment: Neutralny

|

| 171 |

+

###

|

| 172 |

+

Tekst: "Ten przepis jest świetny!"

|

| 173 |

+

Sentyment:"""

|

| 174 |

+

|

| 175 |

+

res = generator(prompt, max_length=85, temperature=0.5, end_sequence='###', return_full_text=False, num_return_sequences=5,)

|

| 176 |

+

for x in res:

|

| 177 |

+

    print(x['generated_text'].split(' ')[1])

|

| 178 |

+

|

| 179 |

+

>>> Pozytywny

|

| 180 |

+

>>> Pozytywny

|

| 181 |

+

>>> Pozytywny

|

| 182 |

+

>>> Pozytywny

|

| 183 |

+

>>> Pozytywny

|

| 184 |

+

```

|

| 185 |

+

It looks like our model is able to pick up some signal from the prompt. Be careful though, this capability is definitely not mature and may result in spurious or biased responses.

|

| 186 |

+

|

| 187 |

+

### Zero-Shot Inference

|

| 188 |

+

|

| 189 |

+

Large language models are known to store a lot of knowledge in their parameters. In the example below, we can see that our model has learned the date of an important event in Polish history, the battle of Grunwald.

|

| 190 |

+

|

| 191 |

+

```python

|

| 192 |

+

prompt = "Bitwa pod Grunwaldem miała miejsce w roku"

|

| 193 |

+

input_ids = tokenizer.encode(prompt, return_tensors='pt')

|

| 194 |

+

# activate beam search and early_stopping

|

| 195 |

+

beam_outputs = model.generate(

|

| 196 |

+

input_ids,

|

| 197 |

+

max_length=20,

|

| 198 |

+

num_beams=5,

|

| 199 |

+

early_stopping=True,

|

| 200 |

+

num_return_sequences=3

|

| 201 |

+

)

|

| 202 |

+

|

| 203 |

+

print("Output:\n" + 100 * '-')

|

| 205 |

+

for i, sample_output in enumerate(beam_outputs):

|

| 206 |

+

    print("{}: {}".format(i, tokenizer.decode(sample_output, skip_special_tokens=True)))

|

| 207 |

+

|

| 208 |

+

>>> Output:

|

| 209 |

+

>>> ----------------------------------------------------------------------------------------------------

|

| 210 |

+

>>> 0: Bitwa pod Grunwaldem miała miejsce w roku 1410, kiedy to wojska polsko-litewskie pod

|

| 211 |

+

>>> 1: Bitwa pod Grunwaldem miała miejsce w roku 1410, kiedy to wojska polsko-litewskie pokona

|

| 212 |

+

>>> 2: Bitwa pod Grunwaldem miała miejsce w roku 1410, kiedy to wojska polsko-litewskie,

|

| 213 |

+

```

|

| 214 |

+

|

| 215 |

+

## BibTeX entry and citation info

|

| 216 |

+

```bibtex

|

| 217 |

+

@misc{papuGaPT2,

|

| 218 |

+

title={papuGaPT2 - Polish GPT2 language model},

|

| 219 |

+

url={https://huggingface.co/flax-community/papuGaPT2},

|

| 220 |

+

author={Wojczulis, Michał and Kłeczek, Dariusz},

|

| 221 |

+

year={2021}

|

| 222 |

+

}

|

| 223 |

+

```

|

added_tokens.json

ADDED

|

@@ -0,0 +1,3 @@


| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:f73effd45f282fdecbce3d5bda192b346d1e2e5dc024d4493ff276656001a5b6

|

| 3 |

+

size 24

|

allegro_reviews/config.json

ADDED

|

@@ -0,0 +1,3 @@


| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:1ace5aef92f7880ccb5fd0e7c5f65556d6914dbd134fa1672b46a0533225c036

|

| 3 |

+

size 811

|

allegro_reviews/create_config_allegro.py

ADDED

|

@@ -0,0 +1,6 @@


| 1 |

+

from transformers import GPT2Config

|

| 2 |

+

|

| 3 |

+

model_dir = "." # ${MODEL_DIR}

|

| 4 |

+

|

| 5 |

+

config = GPT2Config.from_pretrained("gpt2", resid_pdrop=0.0, embd_pdrop=0.0, attn_pdrop=0.0)

|

| 6 |

+

config.save_pretrained(model_dir)

|

allegro_reviews/events.out.tfevents.1625481245.t1v-n-5d840006-w-0.20165.3.v2

ADDED

|

@@ -0,0 +1,3 @@


| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:25a5b7d6e069647cf953e1684211cf4b87049ae4e05610e37b1047966bd36fcc

|

| 3 |

+

size 40

|

allegro_reviews/events.out.tfevents.1625482183.t1v-n-5d840006-w-0.22476.3.v2

ADDED

|

@@ -0,0 +1,3 @@


| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:ee76cbdc38f6bec33ee28c5225264d95b8d46c0a2941ce59fbe8893f798a3de8

|

| 3 |

+

size 40

|

allegro_reviews/events.out.tfevents.1625482418.t1v-n-5d840006-w-0.24291.3.v2

ADDED

|

@@ -0,0 +1,3 @@


| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:d20520f97baa97ebd08bbf9f66afb294613261a1661dbd9bf18ca39b4258e03d

|

| 3 |

+

size 40

|

allegro_reviews/tokenizer.json

ADDED

|

@@ -0,0 +1,3 @@


| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:c1735fd67aa6471a45e6baf09a106fdd7545046f3a805b0820a5d5fcb34ccf76

|

| 3 |

+

size 1515050

|

allegro_reviews/train_tokenizer_allegro.py

ADDED

|

@@ -0,0 +1,26 @@


| 1 |

+

from datasets import load_dataset

|

| 2 |

+

from tokenizers import trainers, Tokenizer, normalizers, ByteLevelBPETokenizer

|

| 3 |

+

|

| 4 |

+

model_dir = "." # ${MODEL_DIR}

|

| 5 |

+

|

| 6 |

+

# load dataset

|

| 7 |

+

dataset = load_dataset("allegro_reviews", split="train")

|

| 8 |

+

|

| 9 |

+

# Instantiate tokenizer

|

| 10 |

+

tokenizer = ByteLevelBPETokenizer()

|

| 11 |

+

|

| 12 |

+

def batch_iterator(batch_size=1000):

|

| 13 |

+

    for i in range(0, len(dataset), batch_size):

|

| 14 |

+

        yield dataset[i: i + batch_size]["text"]

|

| 15 |

+

|

| 16 |

+

# Customized training

|

| 17 |

+

tokenizer.train_from_iterator(batch_iterator(), vocab_size=50265, min_frequency=2, special_tokens=[

|

| 18 |

+

"<s>",

|

| 19 |

+

"<pad>",

|

| 20 |

+

"</s>",

|

| 21 |

+

"<unk>",

|

| 22 |

+

"<mask>",

|

| 23 |

+

])

|

| 24 |

+

|

| 25 |

+

# Save files to disk

|

| 26 |

+

tokenizer.save(f"{model_dir}/tokenizer.json")

|

ckpt-7000/config.json

ADDED

|

@@ -0,0 +1,3 @@


| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:2639ebf1ac7da23195fad0d3961b5051a0d21058e49211160e5ef0aaac020621

|

| 3 |

+

size 864

|

ckpt-7000/flax_model.msgpack

ADDED

|

@@ -0,0 +1,3 @@


| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:d426922657592daf71b1b3b88dc9099cde4696dd4bc9b73556888b869decb784

|

| 3 |

+

size 497764120

|

ckpt-7000/opt_state.msgpack

ADDED

|

@@ -0,0 +1,3 @@


| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:186303788c88a7a93fdbcd9f97729a9041ebc27bcae5d66f5a60efd41c249912

|

| 3 |

+

size 995528480

|

ckpt-7000/training_state.json

ADDED

|

@@ -0,0 +1,3 @@


| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:72047b995289dd00fe7fd487482e84c2640772ccda4a8dd248fa4dcb041f71eb

|

| 3 |

+

size 14

|

config.json

ADDED

|

@@ -0,0 +1,3 @@


| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:2639ebf1ac7da23195fad0d3961b5051a0d21058e49211160e5ef0aaac020621

|

| 3 |

+

size 864

|

convert_to_pytorch.py

ADDED

|

@@ -0,0 +1,5 @@


| 1 |

+

#!/usr/bin/env python3

|

| 2 |

+

from transformers import GPT2LMHeadModel

|

| 3 |

+

|

| 4 |

+

model = GPT2LMHeadModel.from_pretrained("./", from_flax=True)

|

| 5 |

+

model.save_pretrained("./")

|

create_config.py

ADDED

|

@@ -0,0 +1,6 @@


| 1 |

+

from transformers import GPT2Config

|

| 2 |

+

|

| 3 |

+

model_dir = "." # ${MODEL_DIR}

|

| 4 |

+

|

| 5 |

+

config = GPT2Config.from_pretrained("gpt2", resid_pdrop=0.0, embd_pdrop=0.0, attn_pdrop=0.0)

|

| 6 |

+

config.save_pretrained(model_dir)

|

events.out.tfevents.1625408122.t1v-n-5d840006-w-0.4909.3.v2

ADDED

|

@@ -0,0 +1,3 @@


| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:a4f3d64a34ca00c3be72105da0664557fff01b50fc812802428144cebca87b35

|

| 3 |

+

size 40

|

events.out.tfevents.1625465634.t1v-n-5d840006-w-0.10317.3.v2

ADDED

|

@@ -0,0 +1,3 @@


| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:4f8ebd5f1ae292f7e94936111697f725be49810a334c1913a7d4fa8520b588dc

|

| 3 |

+

size 61182

|

events.out.tfevents.1625468593.t1v-n-5d840006-w-0.12620.3.v2

ADDED

|

@@ -0,0 +1,3 @@


| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:1d4bb7621dd88a65736f55b305b26ebe509542fe9d277208ecf7b196c30b9a38

|

| 3 |

+

size 281684

|

events.out.tfevents.1625474538.t1v-n-5d840006-w-0.15018.3.v2

ADDED

|

@@ -0,0 +1,3 @@


| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:973ce04e1a3c163e06174b81a01067ea2564aae7d7d23128f83236e096dcde6b

|

| 3 |

+

size 447251

|

events.out.tfevents.1625488422.t1v-n-5d840006-w-0.26135.3.v2

ADDED

|

@@ -0,0 +1,3 @@


| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:f1e4f47367e373d6e85d822a0489901f7914fdb74f55226fdf9660e27d7dbb70

|

| 3 |

+

size 40

|

events.out.tfevents.1625560105.t1v-n-5d840006-w-0.32054.3.v2

ADDED

|

@@ -0,0 +1,3 @@


| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:145110e582bd6ffa469bd70a6994e8fb7607eef00b32bac499277125e0c76f08

|

| 3 |

+

size 147065

|

events.out.tfevents.1625561792.t1v-n-5d840006-w-0.33847.3.v2

ADDED

|

@@ -0,0 +1,3 @@


| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:33764cac9e2b30a832ce9801b7a442440556a8ffd4944e94b65c8499dda6b5c9

|

| 3 |

+

size 147065

|

events.out.tfevents.1625563613.t1v-n-5d840006-w-0.39089.3.v2

ADDED

|

@@ -0,0 +1,3 @@


| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:b63c776e86a45848fc976c6ac2978493911c7582801e84fb8741d7d54b54c789

|

| 3 |

+

size 9512225

|

events.out.tfevents.1625645925.t1v-n-5d840006-w-0.21118.3.v2

ADDED

|

@@ -0,0 +1,3 @@


| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:a4a9d6cc813f0ab93d9a607b4959ad92e69e211feae5dd0ea6541ae546e5fe99

|

| 3 |

+

size 40

|

events.out.tfevents.1625646523.t1v-n-5d840006-w-0.24030.3.v2

ADDED

|

@@ -0,0 +1,3 @@


| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:d018c6ab315bf844d970f72e79fc335650adcdaf67093c5079fa6f802ccb2198

|

| 3 |

+

size 40

|

events.out.tfevents.1625648517.t1v-n-5d840006-w-0.3756.3.v2

ADDED

|

@@ -0,0 +1,3 @@


| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:0b27d199598f0cda4401d0990d1ea9ce3aef0865c8ce08b57a9f2f3c4ed4c780

|

| 3 |

+

size 40

|

events.out.tfevents.1625652835.t1v-n-5d840006-w-0.5744.3.v2

ADDED

|

@@ -0,0 +1,3 @@


| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:51252162ec50163993bc7c712a4c9f79bb20e036bbca188cbec4181d2a33b0ee

|

| 3 |

+

size 40

|

events.out.tfevents.1625653275.t1v-n-5d840006-w-0.7412.3.v2

ADDED

|

@@ -0,0 +1,3 @@


| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:8d5b8a36445ca8ac2e698b10725deca9add93bc732622d141b9a4ed5c2a8d945

|

| 3 |

+

size 17423021

|

events.out.tfevents.1625829811.t1v-n-5d840006-w-0.18706.3.v2

ADDED

|

@@ -0,0 +1,3 @@


| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:9553b7cf078fa9afe1364d9edd4c482ae47089c72d79438382efd71e1c7e1d80

|

| 3 |

+

size 220906

|

events.out.tfevents.1625845134.t1v-n-5d840006-w-0.23366.3.v2

ADDED

@@ -0,0 +1,3 @@

+version https://git-lfs.github.com/spec/v1

+oid sha256:d05f73015c7d3fcef29fa5a3783fa71061e8f9058326d44181aab1e9499818f5

+size 180

events.out.tfevents.1625848627.t1v-n-5d840006-w-0.26741.3.v2

ADDED

@@ -0,0 +1,3 @@

+version https://git-lfs.github.com/spec/v1

+oid sha256:7348cd7908eedb0f28fad1858fca9100d72f314ffdab2df7d5ddb14612d54910

+size 180

events.out.tfevents.1625850120.t1v-n-5d840006-w-0.28732.3.v2

ADDED

@@ -0,0 +1,3 @@

+version https://git-lfs.github.com/spec/v1

+oid sha256:c4da6dbdd6b6875786a92d3c57d533a99ffb94a070dde23c30df16140b8bcab8

+size 40

events.out.tfevents.1625850884.t1v-n-5d840006-w-0.30623.3.v2

ADDED

@@ -0,0 +1,3 @@

+version https://git-lfs.github.com/spec/v1

+oid sha256:706b0e4a11361ad090a5255c0cbdb33fcb9acadfac53218442717c938279aefa

+size 1029349

events.out.tfevents.1625862814.t1v-n-5d840006-w-0.33177.3.v2

ADDED

@@ -0,0 +1,3 @@

+version https://git-lfs.github.com/spec/v1

+oid sha256:7492648e3b1447fcf7d888343ff46e01fd3e13bd509d7bc9edc3ae9e8d12ced3

+size 514496

events.out.tfevents.1625886911.t1v-n-5d840006-w-0.22644.3.v2

ADDED

@@ -0,0 +1,3 @@

+version https://git-lfs.github.com/spec/v1

+oid sha256:295d632404865620140afe6b59ae69790e38090faddf0b8f823322037d68814f

+size 8313281

events.out.tfevents.1626080463.t1v-n-5d840006-w-0.102926.3.v2

ADDED

@@ -0,0 +1,3 @@

+version https://git-lfs.github.com/spec/v1

+oid sha256:18a9e63d81d2a4da3bbf9ce3622d6024691dc0ffe3e427bb28f64fe070157d69

+size 40

events.out.tfevents.1626087582.t1v-n-5d840006-w-0.107030.3.v2

ADDED

@@ -0,0 +1,3 @@

+version https://git-lfs.github.com/spec/v1

+oid sha256:76f4ca950c81e17eba462c306a30d8375b137702fdff33d20af833fbf2cd9842

+size 1029207

events.out.tfevents.1626100637.t1v-n-5d840006-w-0.124085.3.v2

ADDED

@@ -0,0 +1,3 @@

+version https://git-lfs.github.com/spec/v1

+oid sha256:8382ca0e5eb6ced66cf9c2aa3c00157ef0f8bd8c199e15bbddde539a14789a71

+size 11443277

events.out.tfevents.1626269397.t1v-n-5d840006-w-0.280196.3.v2

ADDED

@@ -0,0 +1,3 @@

+version https://git-lfs.github.com/spec/v1

+oid sha256:ed5103f11503f393b5bb5609f2052a4e4cd95a06b500a2f1e7eaa5d86235a741

+size 13529845

events.out.tfevents.1626412410.t1v-n-5d840006-w-0.404523.3.v2

ADDED

@@ -0,0 +1,3 @@

+version https://git-lfs.github.com/spec/v1

+oid sha256:ce17d9c1158c87ad9958e3c38db67cfecef07098f86568962a1456c33417bba3

+size 13529845

flax_model.msgpack

ADDED

@@ -0,0 +1,3 @@

+version https://git-lfs.github.com/spec/v1

+oid sha256:8bdc00b2ca54a7c2a6d99e950fcb45f81ccdfc20652a6d5020643a9bc37ff77d

+size 497764120

gender_bias.jpeg

ADDED

hate_by_ethnicity.png

ADDED

hate_by_gender.png

ADDED

merges.txt

ADDED

@@ -0,0 +1,3 @@

+version https://git-lfs.github.com/spec/v1

+oid sha256:20832466756a988386123195ca6a4d1ecf92f0c1ff346872412fa54a8a2cb179

+size 546522

papuGaPT2_bias_analysis.ipynb

ADDED

The diff for this file is too large to render.

papuGaPT2_text_generation.ipynb

ADDED

@@ -0,0 +1,1051 @@