Update README.md

Browse files

README.md

CHANGED

|

@@ -27,4 +27,6 @@ The model architecture is LLaMA-1.3B and we adopt the [OpenLLaMA](https://github

|

|

| 27 |

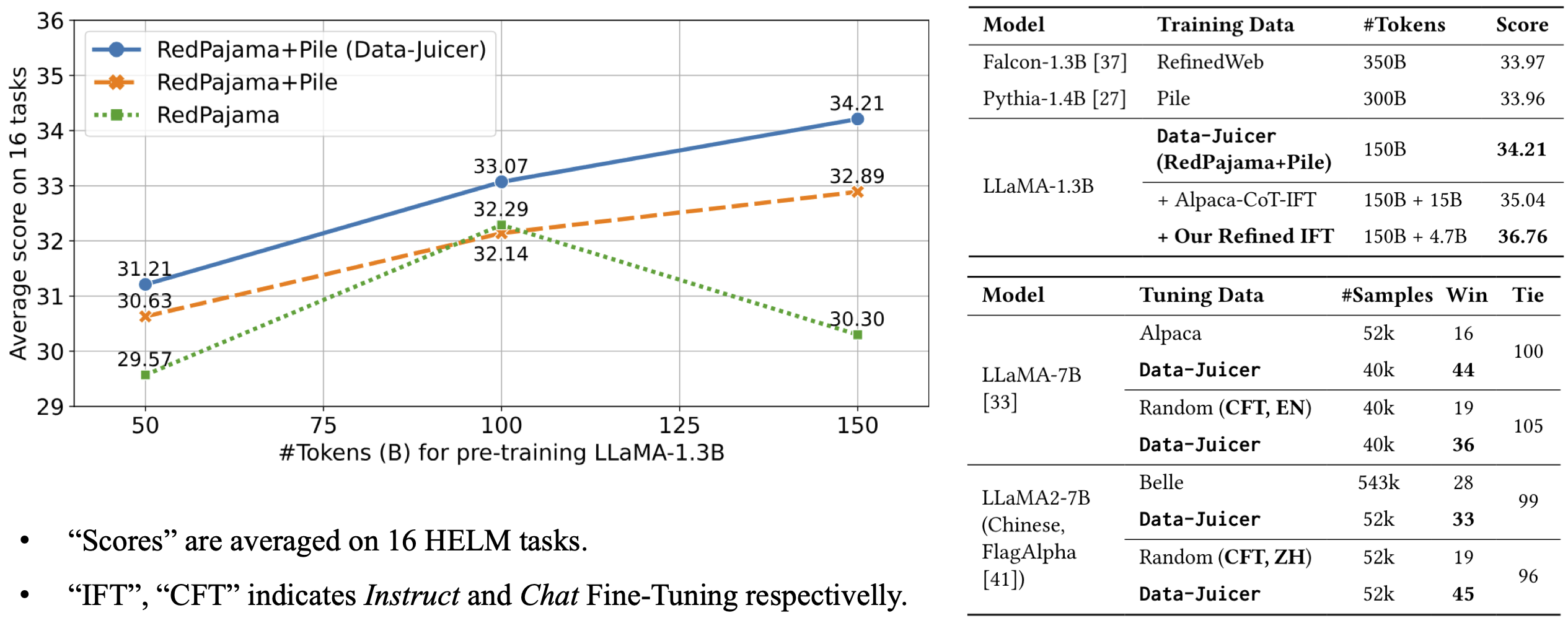

The model is pre-trained on 100B tokens of Data-Juicer's refined RedPajama and Pile.

|

| 28 |

It achieves an average score of 33.07 over 16 HELM tasks, beating LLMs trained on original RedPajama and Pile datasets.

|

| 29 |

|

| 30 |

-

For more details, please refer to our [paper](https://arxiv.org/abs/2309.02033).

|

|

|

|

|

|

|

|

|

| 27 |

The model is pre-trained on 100B tokens of Data-Juicer's refined RedPajama and Pile.

|

| 28 |

It achieves an average score of 33.07 over 16 HELM tasks, beating LLMs trained on original RedPajama and Pile datasets.

|

| 29 |

|

| 30 |

+

For more details, please refer to our [paper](https://arxiv.org/abs/2309.02033).

|

| 31 |

+

|

| 32 |

+

|